Upgrading the k8s cluster at LibreTexts

Hey! Long time no see. Sorry for ditching this blog for like 8 months, but hopefully I'll be a tiny bit more active here. I'd catch you up on life in general, but 1. you probably aren't here for that and 2. this is just some technical stuff to get me back into writing blog posts.

The tl;dr is I actually found a summer internship / part-time job as a site reliability engineer for LibreTexts. We operate a 19-node Kubernetes cluster and it's been "fun" maintaining it and fixing the issues. Well, if your definition of fun includes spending more than 15 hours on a very weird issue where HTTP traffic was being served over port 443 because of a very weird reverse proxying setup, which caused Let's Encrypt certificate validation to fail, combined with a dash of strict SNI being enabled by default without any logging. In all seriousness though, I am actually having fun.

I'm also tasked with upgrading the entire cluster during some scheduled downtime, and I figured this is a pretty good opportunity to write something for the blog, as well as document my progress as I'm doing it so I can look back if something goes wrong. With that said, here goes nothing.

Upgrading the k8s cluster

Prep

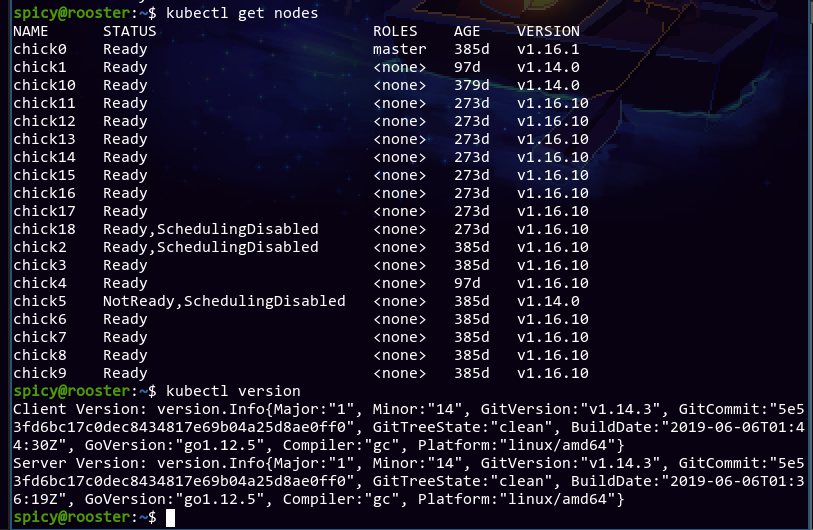

After doing some kubectl get pods and verifying no one's using any services on the cluster, the first thing I'm doing is to upgrade the k8s control plane, since that's the main step I'm not familiar with. Here's some baseline info:

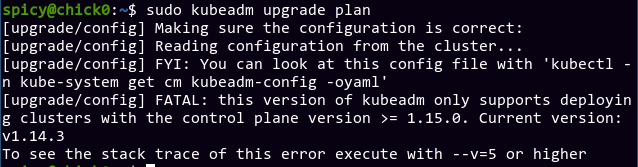

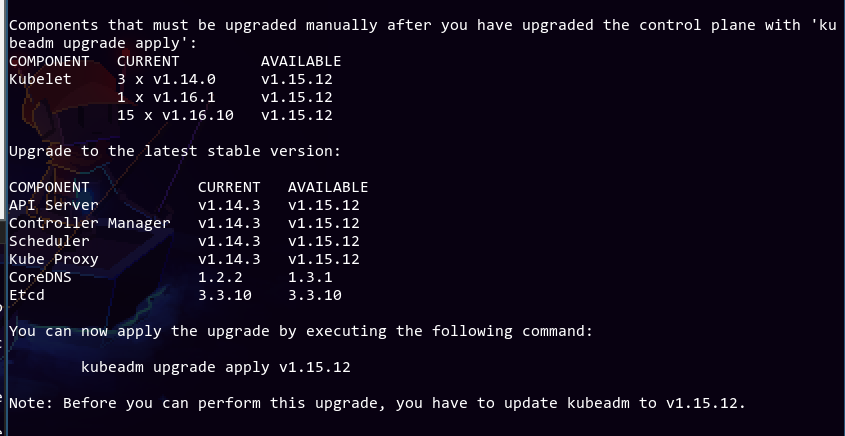

I realize it's not necessarily good practice to upgrade individual kubelets before the control plane, but it's already done so eh. The control plane is at v1.14.3, and I plan on upgrading it all the way to 1.18. To plan the upgrade, I ran kubeadm upgrade plan on the master node:

Yeahhhh... it's also good practice to hold kubelet, kubectl and kubeadm at the same version. About that... *sweatdrops*

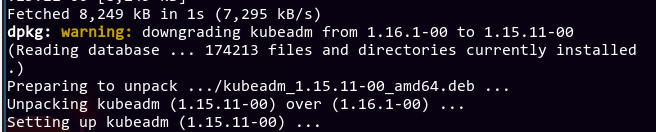

Anyway, let's manually downgrade kubeadm so we can upgrade the control plane one minor version at a time by running apt list -a kubeadm to find what versions are available, then by doing an apt install kubeadm=1.15.11-00 to install that specific version.
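The reason for stepping kubeadm back is that control plane upgrades are only supported one minor version at a time. As a rough sketch (the helper name `minor_upgrade_path` is made up for illustration, not part of any tool), the stops between two versions can be listed like this:

```shell
# Hypothetical helper: print every minor version you have to stop at
# when going from one Kubernetes version to another.
minor_upgrade_path() {
    local from="${1#v}"        # accept both "v1.14.3" and "1.14.3"
    local to="${2#v}"
    local major="${from%%.*}"
    local minor="${from#*.}"
    local last="${to#*.}"
    minor="${minor%%.*}"       # drop the patch part, e.g. 14.3 -> 14
    last="${last%%.*}"
    while [ "$minor" -lt "$last" ]; do
        minor=$((minor + 1))
        echo "${major}.${minor}"
    done
}

minor_upgrade_path v1.14.3 1.18

# For each stop, pick a concrete package version and install it, e.g.:
#   apt list -a kubeadm
#   apt install kubeadm=1.15.11-00
```

Which patch release to use at each stop still depends on what `apt list -a kubeadm` actually offers.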

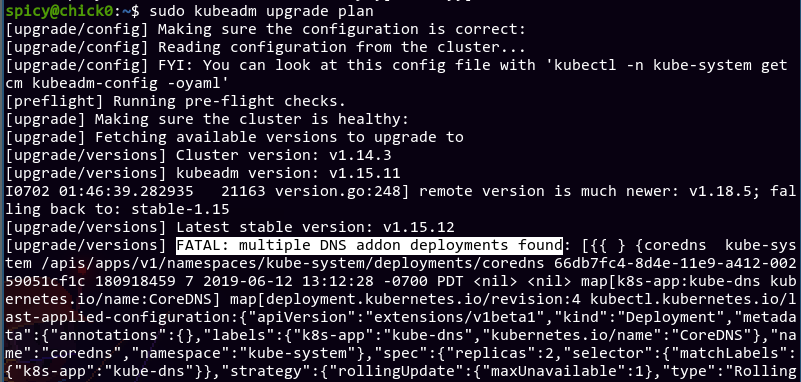

Now that's over with, let's try that again, shall we?

Uh... what?

Fun times with k8s DNS

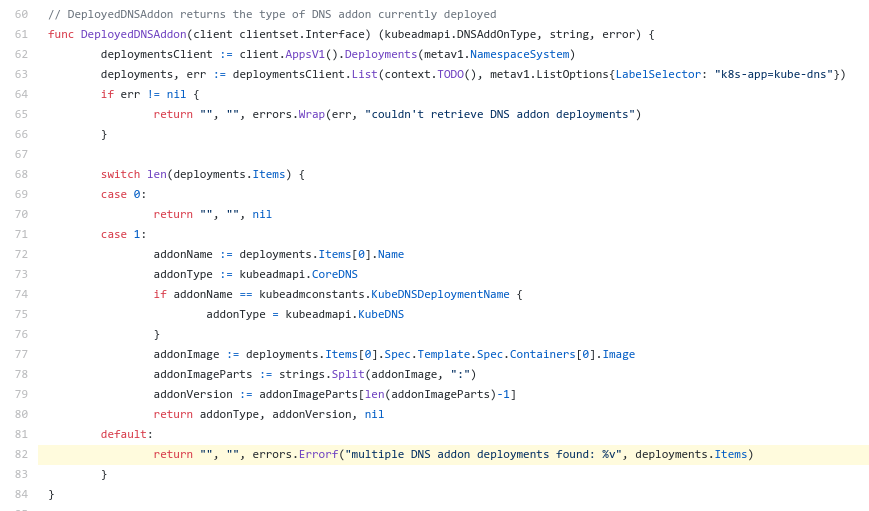

After a bit of Googling, it doesn't look like anyone else has run into this error. I went to the kubeadm source code to find the culprit:

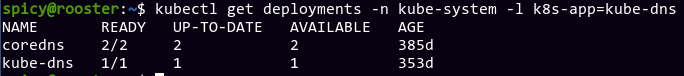

Does that mean we have more than 1 deployment in the kube-system namespace with label k8s-app=kube-dns?

Huh. I'm mostly confused at this point, since I haven't even heard of kube-dns (I always assumed coredns is the one providing DNS for the entire cluster), and it's newer by around a month somehow. From Google, it seems that CoreDNS provides better performance, and has been the default since k8s 1.11. I have no idea why kube-dns would even appear here, quite frankly.

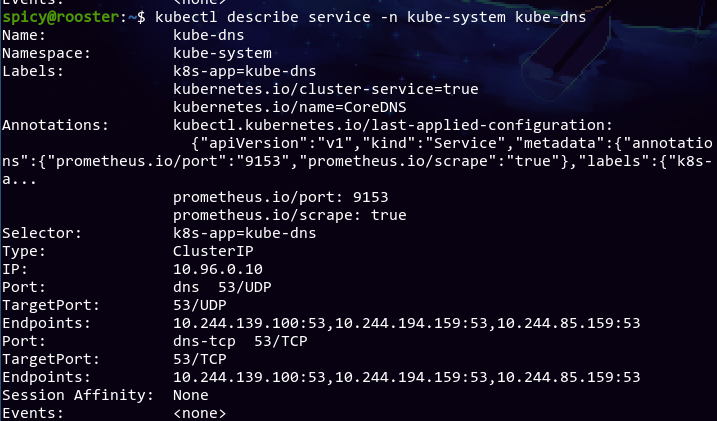

I hoped looking at the kube-dns service would give me some hints as to what's going on:

The kube-dns service in the kube-system namespace has three labels: k8s-app=kube-dns, kubernetes.io/cluster-service=true, and kubernetes.io/name=CoreDNS, which confused me even more since the names kube-dns and CoreDNS don't really help me find out what's actually being used. The selector is k8s-app=kube-dns, so it implies CoreDNS is just not used. Problem solved?

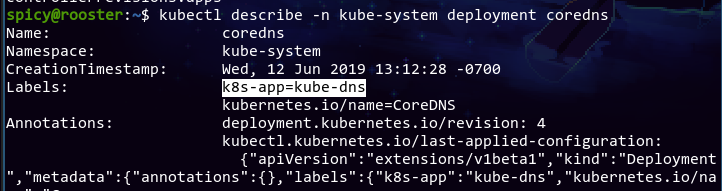

The coredns deployment, for whatever reason, has the label k8s-app=kube-dns. Thanks a lot. It turns out the kube-dns deployment also has the same label, so maybe this entire time the cluster had two DNS services, and it's pretty much up to chance which one gets the DNS requests.
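Checking for the duplicate label can be sketched roughly like this. The two function names are made up for illustration; the namespace and label are the ones from the error above.

```shell
# List every deployment in kube-system carrying the k8s-app=kube-dns label.
dns_deployments() {
    kubectl get deployments -n kube-system -l k8s-app=kube-dns -o name
}

# Succeeds only when exactly one deployment has the label.
dns_label_is_unique() {
    [ "$(dns_deployments | grep -c .)" -eq 1 ]
}

# Usage:
#   dns_deployments
#   dns_label_is_unique || echo "more than one DNS deployment (or none)"
```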

During the Google search, I also found something called "addons", and apparently CoreDNS is an addon you can install to the cluster. There are also references to an "addon manager", but I can't figure out how to list addons (doesn't look like it's even possible? Mentions of /etc/kubernetes/addons but that folder doesn't even exist on the master node? An addon manager that seems like it's an addon itself?)

Anyway, CoreDNS seems to be superior, kubectl cluster-info says CoreDNS is running at ... instead of saying kube-dns, and it looks like kube-dns is just one single deployment with no other components (yes, there's a Service, but it's apparently being used by CoreDNS). So I opted to just back up the deployment using kubectl get -n kube-system deployment kube-dns -o yaml > kube-dns-deployment-backup.yml and then delete it using kubectl delete -n kube-system deployment kube-dns. Fortunately, pods in the cluster still seem to have DNS after this step (verified by getting into a random pod using kubectl exec then trying to ping domain names), so I think I got away with it.
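Put together as a small sketch, the backup-then-delete step and the DNS check look like this. The function names are hypothetical, and the pod and domain arguments are whatever you pick; the check also assumes the pod's image ships ping.

```shell
# Back up the kube-dns deployment, then delete it.
# The delete is skipped if the backup could not be written or is empty.
retire_kube_dns() {
    local backup="${1:-kube-dns-deployment-backup.yml}"
    kubectl get -n kube-system deployment kube-dns -o yaml > "$backup" || return 1
    [ -s "$backup" ] || return 1
    kubectl delete -n kube-system deployment kube-dns
}

# Resolve a name from inside a pod, the same way the manual ping check works.
pod_can_resolve() {
    kubectl exec "$1" -- ping -c 1 "$2"
}

# Usage:
#   retire_kube_dns
#   pod_can_resolve <some-pod> <some-domain>
```

Making the delete depend on a non-empty backup file is a small safety net, since a failed kubectl get would otherwise leave an empty file behind.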

Phew, now that's over with, let's continue with our upgrade!

Upgrading to 1.15

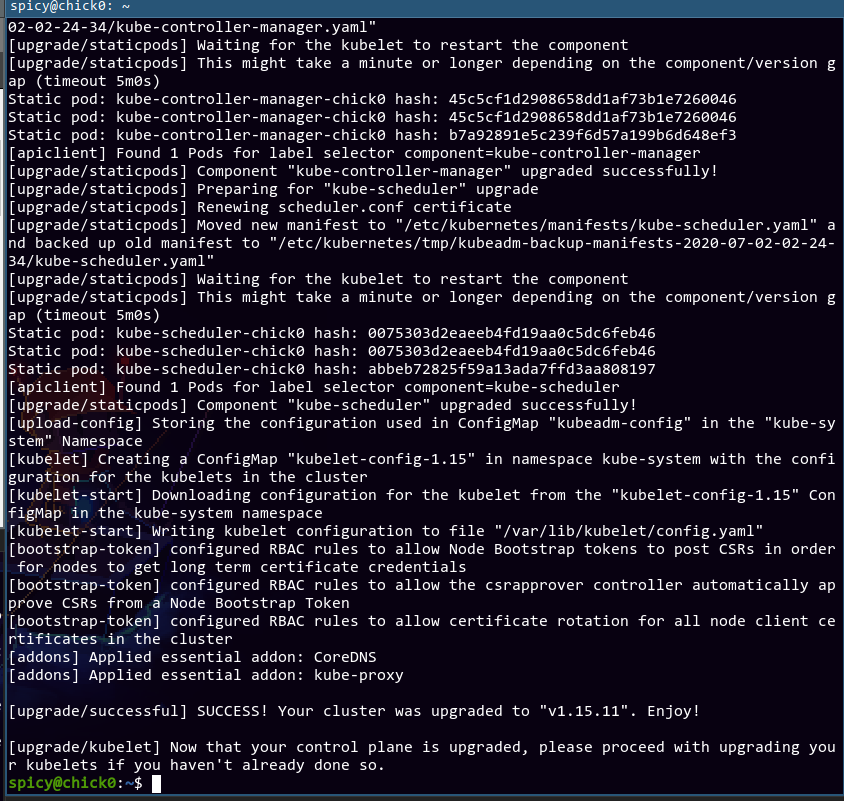

Cool, no significant issues. However, we don't want to upgrade to v1.14.10, and upgrading to v1.15.12 seems to require kubeadm v1.15.12 which doesn't exist in apt for some reason? Instead, I opted to upgrade to 1.15.11 using kubeadm upgrade plan v1.15.11. We're only staying on this version for a bit anyway. Let's go!

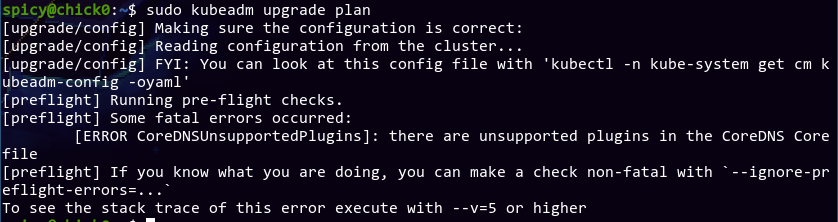

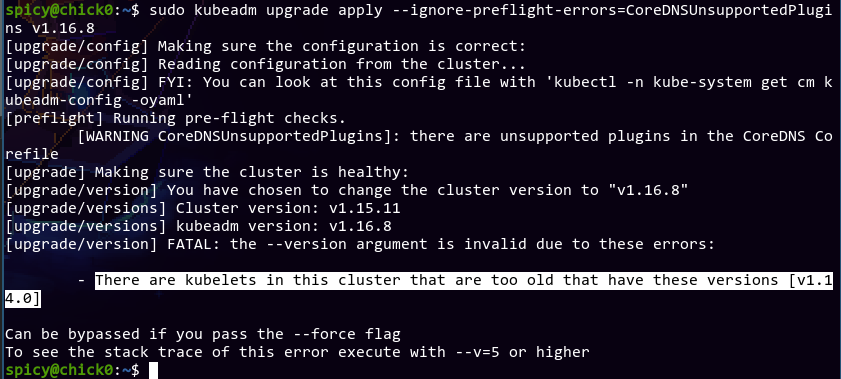

I omitted a bunch of output, but the tl;dr is the upgrade seems successful. Rather than upgrading the kubelets, I opted to just push forward with the control plane upgrade. I upgraded kubeadm using apt to 1.16.8-00, then repeated the kubeadm upgrade plan process again. Now that I resolved that DNS issue, surely this time it will go as expected?

Well then. Can't say I didn't expect this. Oh well.

Even more fun with DNS!

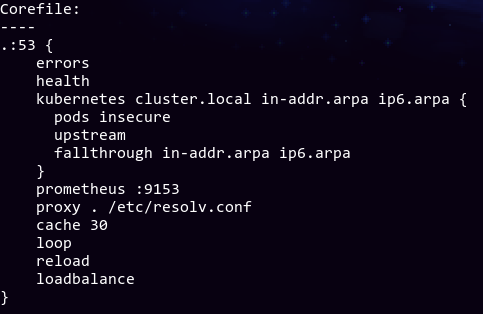

Fortunately for me this time, searching online actually showed me a GitHub issue about this problem. Even better, it seems that my CoreDNS configmap is the exact same as the person who made the issue:

The issue says that the proxy plugin in the config is deprecated, and the forward plugin is used in its place. It should be dealt with automatically during the upgrade, and they can ignore the preflight error safely. Since I don't have any other directives compared to that person, I think it's fine for me as well. I did a kubeadm upgrade plan --ignore-preflight-errors=CoreDNSUnsupportedPlugins v1.16.8, and everything went through fine. Time to apply the upgrade?
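To make the proxy-to-forward change concrete, here is a tiny illustration. It is only a sketch: the Corefile fragment below is a made-up example rather than our actual ConfigMap, the function name is hypothetical, and a sed one-liner is not how kubeadm performs the migration. It only covers the simple case of a bare proxy directive with no extra options.

```shell
# Illustration only: rewrite a plain "proxy" directive to "forward",
# reading a Corefile on stdin.
proxy_to_forward() {
    sed -E 's/^([[:space:]]*)proxy([[:space:]])/\1forward\2/'
}

# Hypothetical Corefile fragment, not the real ConfigMap.
proxy_to_forward <<'EOF'
.:53 {
    errors
    proxy . /etc/resolv.conf
    cache 30
}
EOF

# The live config can be inspected with:
#   kubectl -n kube-system get configmap coredns -o yaml
```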

Breathe. Baby steps. Time to upgrade chick1 and chick10 before fully upgrading the cluster, I guess.

Digression: upgrading chick1 and chick10

I'm just following the preexisting procedure to upgrade the systems, skipping the part about holding the kubelet package (I just went all the way to 1.18.5, the latest version). Now that that's finished, we can finally go ahead with the upgrade, right?

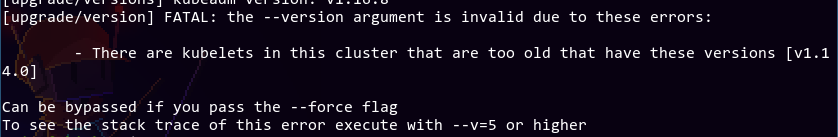

Nope, I still get the same error. Looking at kubectl get pods, it's probably that chick5 is stuck at v1.14, and the cluster is waiting for it to be upgraded. That machine is down (I'm not entirely sure what happened to it other than it's powered off), so I can't upgrade it.
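A quick way to double check which nodes are lagging is to compare the kubelet version each node reports against the one you expect. This is a rough sketch with a made-up function name; it relies on kubectl's custom-columns output and awk.

```shell
# Print "name version" for every node whose kubelet is not at the given version.
nodes_not_at() {
    kubectl get nodes --no-headers \
        -o custom-columns=NAME:.metadata.name,VERSION:.status.nodeInfo.kubeletVersion |
        awk -v want="$1" '$2 != want { print $1, $2 }'
}

# Usage:
#   nodes_not_at v1.18.5
```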

Removing chick5

I'm still a bit uncomfortable using --force on commands, in case it ignores a different issue that I don't want to ignore. So I opted to just remove chick5 as a node from the cluster entirely. After all, it's currently offline so it's not like we're losing anything, and I can just join it back using kubeadm join later. Therefore:
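A minimal sketch of that removal, assuming a plain node delete is enough for a machine that is already powered off (the function names are made up for illustration):

```shell
# Drop a node object from the cluster. For a powered-off machine there is
# nothing left to drain, so this sketch goes straight to the delete.
remove_node() {
    kubectl delete node "$1"
}

# Later, on a control plane node: print a fresh join command to run on the
# machine once it is back online.
print_join_command() {
    kubeadm token create --print-join-command
}

# Usage:
#   remove_node chick5
#   print_join_command
```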

F in the chat.

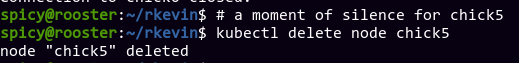

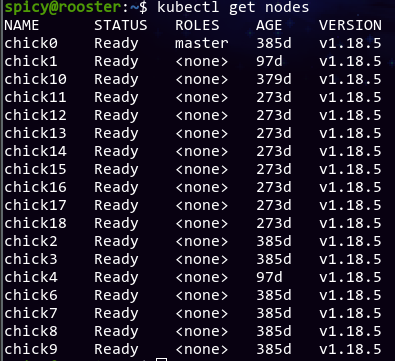

Finish upgrading the control plane

The kubeadm upgrade apply --ignore-preflight-errors=CoreDNSUnsupportedPlugins v1.16.8 executed without a problem. I then rinsed and repeated the process by installing kubeadm 1.17.4, planning the upgrade, then applying it, again without issue. One final iteration of the cycle (after upgrading all kubelets on all nodes to 1.18.5 first), and the entire control plane is now at v1.18.5. Glad that went by without any huge problems.

After rebooting all the nodes, everything looks clean. Great!

Other upgrades

Upgrading all Ubuntu packages on chicks

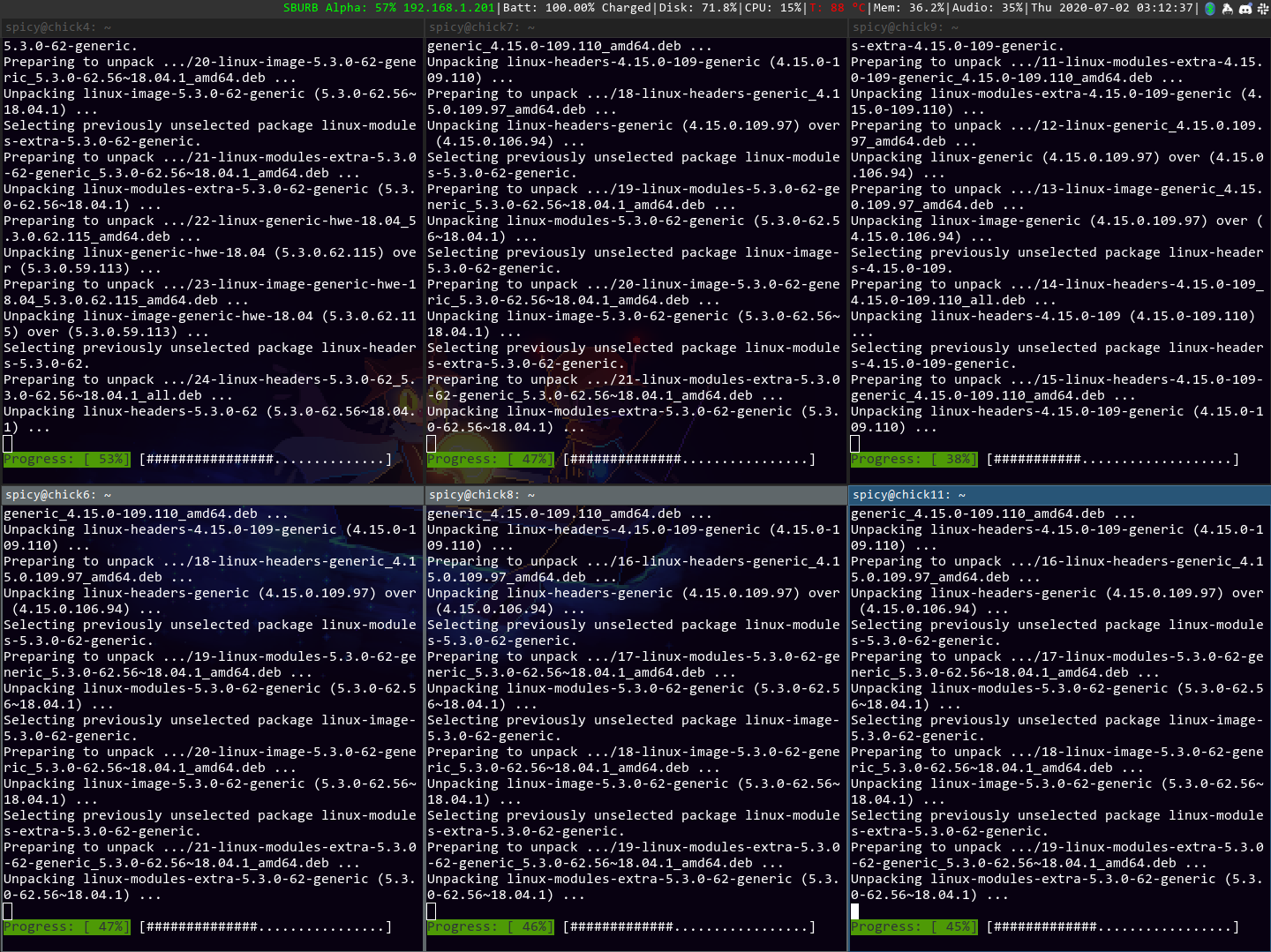

I did this along with upgrading the control plane, since it needed higher versions of kubelet, so I just went ahead with full apt update && apt dist-upgrades for everyone. I didn't bother cordoning or draining the nodes, since it's expected that the cluster is going to have downtime.

Also, watching this is glorious.
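For comparison, if the cluster had to stay up during the package upgrades, each node would be drained first and uncordoned afterwards. This is a rough sketch of what that would look like, not what I did here. It assumes passwordless SSH and sudo on the node, the function name is hypothetical, and depending on the workloads kubectl drain may need extra flags.

```shell
# Upgrade one node without dropping its workloads: move pods away,
# upgrade packages over SSH, then let pods schedule there again.
upgrade_node_gracefully() {
    local node="$1"
    kubectl drain "$node" --ignore-daemonsets || return 1
    ssh "$node" 'sudo apt update && sudo apt dist-upgrade -y' || return 1
    kubectl uncordon "$node"
}

# Usage:
#   upgrade_node_gracefully chick1
```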

Upgrading kubectl on rooster

The kubectl on rooster seems to be installed manually into /usr/local/bin, so I removed that and just used apt to install it (after adding the kubernetes apt repo). This way, we don't have to manually upgrade it.

Upgrading packages on rooster

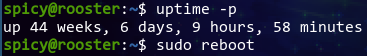

Eh. Your standard old apt update && apt dist-upgrade. Nothing fancy here.

Also, RIP the 44 weeks of uptime. About time we rebooted to use a more up-to-date kernel, though.

Disaster strikes

NOTE: This is written post-mortem, since when this happened my first thought was to fix the issue, not to write this blog post while fixing the issue. I also lost some command output that I could have screenshotted, because journalctl's less ate some of the terminal history.

Cool, everything seems to be working. Let's try accessing the staging cluster and see how it goes!

*some time later*

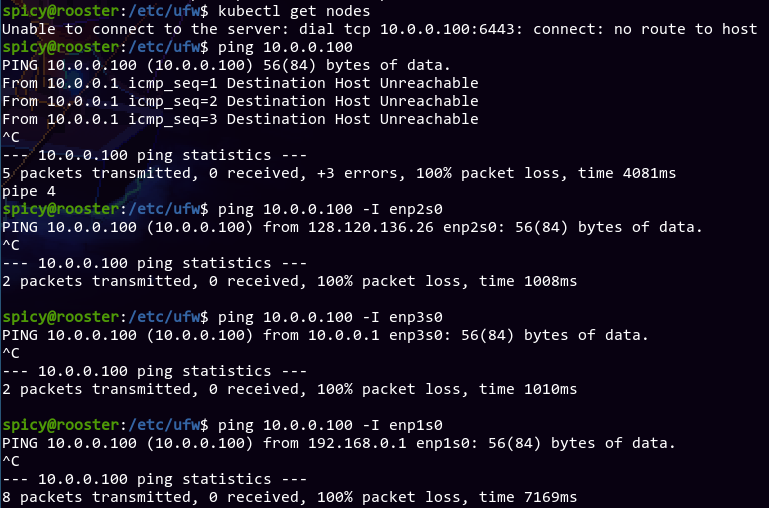

Hmm. Doesn't seem to be up. Let's check kubectl!

*after noticing kubectl hangs for a long time*

Um... is the cluster up?

um...

UM...

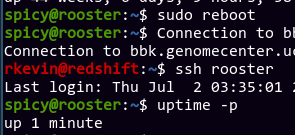

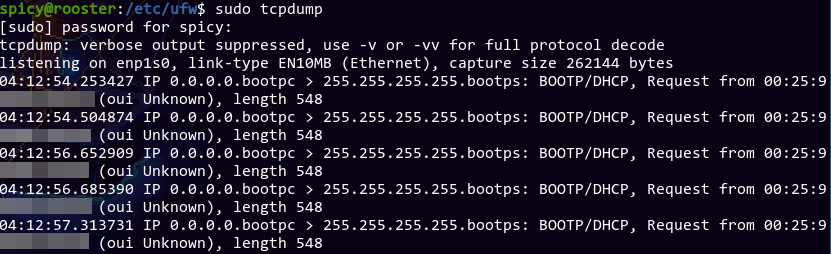

After some time panicking and worrying that I might've caused all the nodes to break, and they might require a power cycle (which is difficult since COVID-19 means no one can get to them easily), I decided to calm down and see if I could get to anything. After using nmap to confirm that no IP in the entire subnet responded to pings, I decided to try using tcpdump and see if anything's alive.

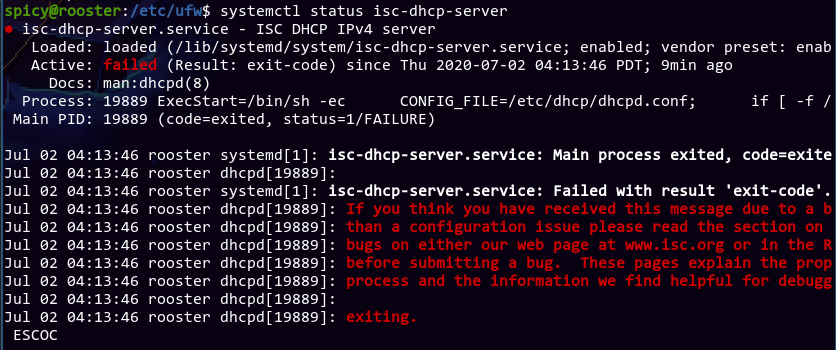

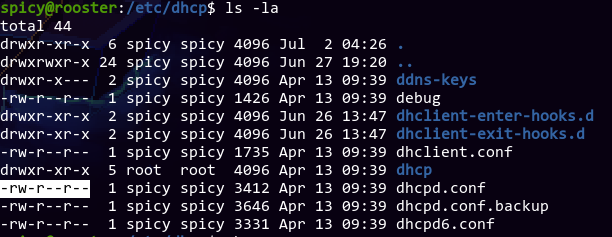

Oh cool, at least some stuff is alive. It seems like a bunch of different devices are looking for DHCP, and I remembered rooster is supposed to be the DHCP server. So, what happened to the DHCP server? I tried doing a systemctl restart on it, but it's still not working.

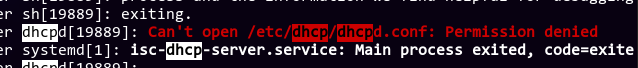

Huh. I wonder why dhcpd failed. Maybe a config issue? Let's check journalctl, and use / to search for dhcp:

Interesting. I wonder why it's set to not world readable---

Wait, the file is already world readable! Maybe dhcpd needs to write to it somehow? I identified that dhcpd runs as the dhcpd user, so maybe we can give it write permissions?

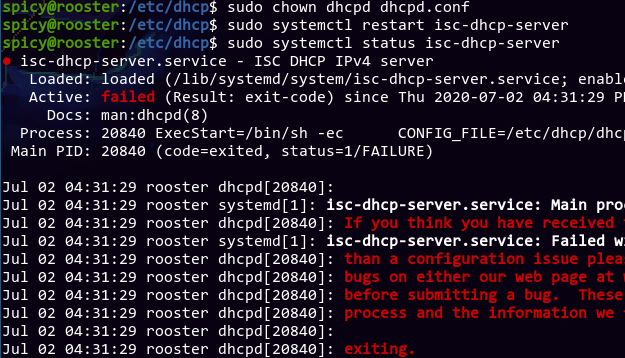

That didn't work. Why can't it read a file that should be world readable? After a couple of minutes, I finally noticed an inconspicuous log entry right above the permission denied message:

Jul 02 04:34:22 rooster kernel: audit: type=1400 audit(1593689662.619:26): apparmor="DENIED" operation="open" profile="/usr/sbin/dhcpd" name="/home/spicy/metalc-configurations/dhcp/dhcpd.conf" pid=21140 comm="dhcpd" requested_mask="r" denied_mask="r" fsuid=0 ouid=111
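Entries like this are easy to miss when scrolling through journalctl, so it can help to filter for them directly. A small sketch (the function name is made up) that pulls the profile and the denied path out of kernel audit lines shaped like the one above:

```shell
# Read log lines on stdin and print "profile -> path" for each AppArmor denial.
apparmor_denials() {
    sed -n 's/.*apparmor="DENIED".* profile="\([^"]*\)" name="\([^"]*\)".*/\1 -> \2/p'
}

# Usage, against the kernel log:
#   journalctl -k --no-pager | apparmor_denials
```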

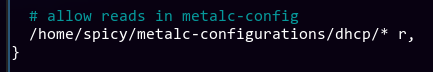

Ah! It's AppArmor that doesn't like dhcpd. We have a private git repo with all the configurations for all the services, and we symlink folders in /etc to point to this repo. Therefore, /etc/dhcp/dhcpd.conf is actually symlinked to /home/spicy/metalc-configurations/dhcp/dhcpd.conf (the path in the log entry above). This means that to AppArmor, it looks suspiciously like dhcpd is compromised and is trying to access files that it shouldn't have access to. To fix this, all we need to do is to add an extra rule to /etc/apparmor.d/usr.sbin.dhcpd:
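The exact rule isn't reproduced here, so treat the following as a sketch of the general shape: a read-only AppArmor path rule covering the directory from the denial in the log. The helper name is made up, and the directory is taken from that log entry.

```shell
# Emit a read-only AppArmor rule for everything under the given directory.
apparmor_read_rule() {
    printf '%s/** r,\n' "${1%/}"
}

apparmor_read_rule /home/spicy/metalc-configurations/dhcp

# The printed line goes inside the profile's braces in
# /etc/apparmor.d/usr.sbin.dhcpd, next to the existing path rules.
```

Keeping the rule read-only matches what the log asked for (requested_mask="r"), so dhcpd gets no more access than it was denied.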

After a systemctl restart apparmor and then restarting DHCP, the nodes slowly come back up again! Phew, disaster avoided.

Now, back to our regularly scheduled program

Fixing networking issues after upgrade

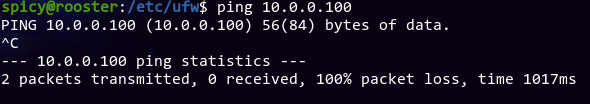

The same thing happened again. This happened during the last upgrade of kubelets as well. Pretty sure it's just kubelet restarting and docker not cleaning old stuff up. To solve this, I just ran docker system prune -f on every single chick. (Added benefit of saving a crap ton of disk space!)

Upgrading NFS server

The NFS server is running CentOS, so I did a yum check-update followed by a yum update, then a reboot. Nothing fancy.

Helm charts

I upgraded some helm charts to the latest stable version. I'd like to say there are no issues and everything is smooth sailing, but then I'd be lying. None of the issues were big, though.

- I had the same issue as the one I spent a ridiculous amount of time on previously, but that's because I made a typo in the nginx config that fixes the issue. That typo is now properly fixed.

- I also had some trouble upgrading one of the JupyterHubs due to this error, but that got solved easily once I manually deleted the autohttps deployment.

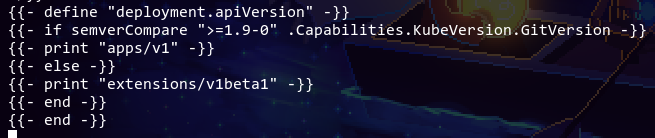

For two of the helm charts, I had the same issue as this StackOverflow. I thought I should modify the helm chart to use apps/v1 and the helm chart was out of date, but that turned out to not be the case:

I ended up just uninstalling and reinstalling the helm charts entirely. Worked like a charm. My guess is that the old version of the helm chart used extensions/v1beta1, so when helm tries to diff the difference it freaks out because it doesn't understand the old manifests.

Since I reinstalled the nginx ingress, I had to reconfigure its LoadBalancer IP since we're using MetalLB. Since it kept saying external IP is pending, I opted for the lazy way and just did kubectl patch service [NAME_OF_INGRESS_CONTROLLER] -p '{"spec": {"externalIPs":["10.0.1.61"]}}', since 10.0.1.61 is the old IP, so we don't need to change anything.
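A less lazy alternative, which I did not try here, would be to ask MetalLB for the old address through the service's spec.loadBalancerIP field instead of setting externalIPs. This is a sketch under the assumption that the address is inside a pool MetalLB is configured to hand out; the function name is hypothetical.

```shell
# Request a specific LoadBalancer IP for a service.
pin_loadbalancer_ip() {
    local service="$1"
    local ip="$2"
    kubectl patch service "$service" -p "{\"spec\": {\"loadBalancerIP\": \"${ip}\"}}"
}

# Usage:
#   pin_loadbalancer_ip [NAME_OF_INGRESS_CONTROLLER] 10.0.1.61
```

If the external IP stays pending after this, the cause is more likely on the MetalLB side (for example its address pool config) than in the service itself.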

Conclusion

With that, the cluster upgrade is complete! It took a bit less than 8 hours in total (including the time it took to write this). Upgrades could've gone smoother, but to be fair solving these types of issues is what a sysadmin/SRE should be doing anyway. Fortunately, even with all these mishaps nothing super bad happened, and I was able to finish the upgrade ahead of schedule (to be fair, the maintenance window is intentionally huge, but still). Anyway, I learned quite a bit during the process and in the end everything worked out.

I know my homepage says there's a lot of stuff I need to write about. All in due time. Hopefully.