Automating wildcard certificate issuing in Kubernetes

Time for more janky setups! I did say I was going to document some of the flailing that is me playing around with a bare-metal Kubernetes cluster. This time, I'm gonna talk about the hellscape that is automating DNS-01 ACME challenges for cert-manager.

Why are you doing this?

I've had wildcard certificates for a while now, and I much prefer them: I don't need to grab a new certificate whenever I create a subdomain, I can just add a config to my Apache webserver (DNS resolves everything in *.rkevin.dev to my system, with a couple of exceptions, so I can do virtual host routing). It also helps since I have like 20 active virtual hosts now, some of which are hidden, either for sharing private files with friends or as solutions to puzzles. They have domain names like e166e01b-be51-4368-96e5-c36856cbaa9b.rkevin.dev, so they are unguessable (and no, that particular domain doesn't lead anywhere). I want to keep them hidden, so people can't just find them by reading the names on the HTTPS certificate or by looking up all the Let's Encrypt certificates issued for this domain on crt.sh. A wildcard certificate only lists *.rkevin.dev, so neither of those reveals anything.
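To make the "wildcard" part concrete: a name like *.rkevin.dev covers exactly one extra label, so it matches the hidden hosts above, but not the bare domain and not deeper names such as webchal.photon.rkevin.dev. Here's a minimal Python sketch of that rule, assuming the usual convention that * must be the entire left-most label; matches_wildcard is a hypothetical helper for illustration, not part of my setup.

```python
def matches_wildcard(pattern: str, hostname: str) -> bool:
    """Return True if hostname is covered by a certificate name like '*.rkevin.dev'.

    Assumes the common rule: '*' must be the whole left-most label and
    matches exactly one label (never zero, never several).
    """
    pattern = pattern.lower().rstrip(".")
    hostname = hostname.lower().rstrip(".")
    if not pattern.startswith("*."):
        # Not a wildcard: plain case-insensitive comparison.
        return pattern == hostname
    suffix = pattern[1:]  # e.g. ".rkevin.dev"
    if not hostname.endswith(suffix):
        return False
    first_label = hostname[: -len(suffix)]
    # Exactly one non-empty label may stand in for the '*'.
    return first_label != "" and "." not in first_label


for host in [
    "e166e01b-be51-4368-96e5-c36856cbaa9b.rkevin.dev",
    "rkevin.dev",
    "webchal.photon.rkevin.dev",
]:
    print(host, matches_wildcard("*.rkevin.dev", host))
```

This is also why deeper names like webchal.photon.rkevin.dev need their own certificate names (and their own _acme-challenge records).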

However, I've been renewing these certificates manually every 3 months, and it's getting annoying. The registrar I got this domain from doesn't offer any API for updating DNS entries, and to get a wildcard certificate I need to place a DNS TXT record on my domain to prove that I control it. Since I've been toying with the idea of running all my services on a bare-metal k8s cluster, it's even more important that I automate certificate issuance somehow so the cluster can just take care of it.
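Part of why this can't be a one-time manual edit is that the TXT value changes with every issuance. Per RFC 8555 (section 8.4), it's the base64url-encoded SHA-256 digest of the key authorization, which is the challenge token joined by a dot to the base64url JWK thumbprint of the ACME account key. A minimal sketch of that computation, assuming you already have the token and the thumbprint (the values below are made up):

```python
import base64
import hashlib


def b64url(data: bytes) -> str:
    """base64url without '=' padding, as ACME (RFC 8555) requires."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def dns01_txt_value(token: str, account_thumbprint_b64url: str) -> str:
    """Value to publish in the _acme-challenge TXT record.

    key authorization = token + "." + base64url(JWK thumbprint of account key)
    TXT value         = base64url(SHA-256(key authorization))
    """
    key_authorization = f"{token}.{account_thumbprint_b64url}"
    digest = hashlib.sha256(key_authorization.encode("ascii")).digest()
    return b64url(digest)


# Hypothetical token and thumbprint, purely for illustration.
print(dns01_txt_value("example-token", "example-thumbprint"))
```

cert-manager computes this for you; the point is that the record content is per-challenge, so something has to write it into DNS every single time.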

The high-level setup

This is the tl;dr section. If you somehow got here from Google (hi internet!) because you want something like this on your own cluster, but want to figure out stuff yourself and not look at my exact configuration, here's the high-level overview.

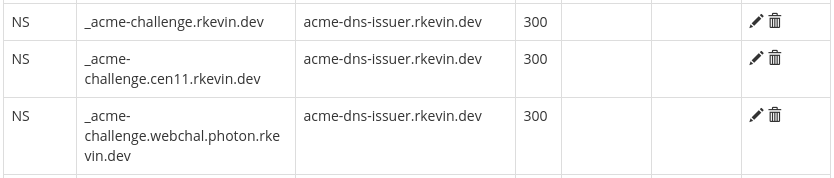

I modified the DNS records at my registrar so that _acme-challenge.rkevin.dev has its NS set to my server. I configured a BIND DNS server running as a Kubernetes deployment, exposed its port 53 to the public, and configured it with a secret shared with cert-manager. I'm using cert-manager to get certificates automatically, and I have a ClusterIssuer that deals with DNS-01 challenges using RFC2136 by talking to the BIND DNS server. This way, I don't have to run a proper nameserver for my entire domain, but queries for _acme-challenge.rkevin.dev (and a couple of others, like _acme-challenge.webchal.photon.rkevin.dev for ECS189M challenges on photon) are routed to my server and forwarded to the k8s service that's BIND, and BIND can answer the TXT challenges since cert-manager has configured it with the right responses. HTTP-01 challenges are handled normally by the default ingress, which is exposed on port 80.
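Which names need delegating follows from how DNS-01 names its record: the CA looks up a TXT record at _acme-challenge prepended to the domain being validated, and for a wildcard the "*." is dropped first. So *.rkevin.dev and rkevin.dev are both validated at _acme-challenge.rkevin.dev. A small sketch of that mapping (challenge_record_name is a hypothetical helper):

```python
def challenge_record_name(dns_name: str) -> str:
    """TXT record name a CA queries when validating dns_name via DNS-01."""
    name = dns_name.rstrip(".").lower()
    if name.startswith("*."):
        # Wildcards are validated on their base domain.
        name = name[2:]
    return f"_acme-challenge.{name}"


for requested in ["*.rkevin.dev", "rkevin.dev", "webchal.photon.rkevin.dev"]:
    print(f"{requested:28} -> {challenge_record_name(requested)}")
```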

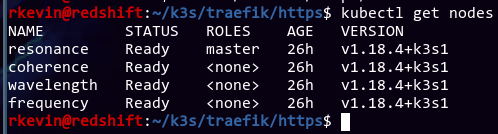

What I have beforehand

I set up a simple k8s cluster to play around with. It's using k3s, a lightweight k8s distribution aimed at IoT devices that works pretty well on ARM. Since I'm running it on 4 Raspberry Pis, this works perfectly.

I had already set up the cluster a couple of months ago, but I broke a few things while playing around with Ingresses, so I ripped everything out and started fresh (hence the 26h age). Fortunately I kept all of the k8s objects I created, so setting everything back up was a breeze.

I also have an NFS storage provisioner for persistent volumes. The NFS server runs on one of the Pis and only has about 10GB of free space, but hopefully that's enough for now. Worst case, I can always move it.

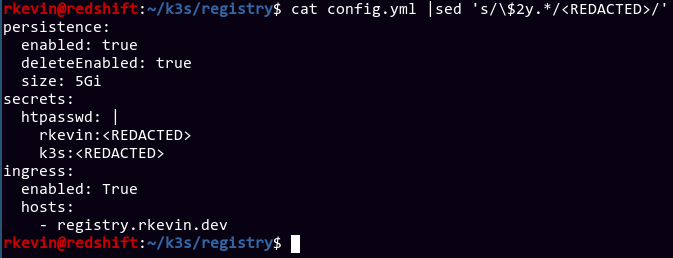

Along with this, I have a private Docker registry. I definitely need one, since I know I'll be running a bunch of custom services and I don't want to put them all on Docker Hub. These are the values I used for the stable/docker-registry helm chart.

Side note about the registry: since Docker hates it when I use HTTP instead of HTTPS and won't even let me log in to the registry, I did a dirty hack where my regular Apache webserver (the one serving this site right now, as of time of writing) terminates HTTPS before passing requests on to my internal service. It's hilarious that I plan on migrating everything to k8s so I can stop running Apache on a single server and manually writing a ridiculous amount of Apache config files, and in the process I need to rely on Apache itself.

Finally, since I'm using k3s, some things are installed automatically. k3s comes with Traefik as an ingress controller, and a bare-metal load balancer that makes all services of type LoadBalancer behave like NodePort services, so I can easily tell my router to port-forward to the services I want to make public.

Configuring Porkbun DNS entries

I've mentioned before that I got this domain from Porkbun, and I've had zero issues so far. However, they don't offer any way to change DNS entries programmatically other than their web interface. Since I don't want to write a crappy web client that logs into the website (with 2FA tokens as well), simulates mouse clicks and changes the DNS entry, I had to look for another solution. I could change the authoritative DNS server to point to me and control DNS for my entire domain, but considering my servers have unpredictable downtime (heck, one of my servers has such an unstable power supply that it falls over if you look at it the wrong way), I don't want to do that. I want to run a DNS server that only deals with the _acme-challenge DNS queries, and let Porkbun's servers handle the rest. Google tells me (as did digging into how ACMEDNS works, despite me not using ACMEDNS) that Let's Encrypt respects CNAME and NS records, so I can use those to delegate answering to a DNS server that I actually control. Therefore, I created the following entries:

acme-dns-issuer.rkevin.dev resolves to 73.66.52.69, like everything else (since I have only one IPv4 address from my ISP and I'm running everything from my apartment). With this in place, if I expose a DNS server on my port 53, any recursive DNS resolver that asks for the TXT record should go to my DNS server for the record. Cool!
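Roughly, a resolver ends up at whichever nameserver is responsible for the deepest zone cut above the queried name, which is why only the _acme-challenge names land on my BIND server while everything else stays with Porkbun. The A record for acme-dns-issuer.rkevin.dev itself lives in Porkbun's zone, so resolvers can still find my server. This Python sketch models only that "longest delegated suffix" choice; the DELEGATIONS table is a hand-written assumption, and real resolvers discover zone cuts by walking down from the root and caching NS records along the way.

```python
# Hand-written, hypothetical table of zone cuts and who answers for them.
DELEGATIONS = {
    "rkevin.dev": "Porkbun's nameservers",
    "_acme-challenge.rkevin.dev": "acme-dns-issuer.rkevin.dev (my BIND)",
    "_acme-challenge.webchal.photon.rkevin.dev": "acme-dns-issuer.rkevin.dev (my BIND)",
}


def authoritative_for(name: str, delegations: dict[str, str]) -> str:
    """Return the server for the longest delegated suffix of `name`."""
    labels = name.rstrip(".").lower().split(".")
    # Try the full name first, then drop one label at a time from the left,
    # so the deepest (most specific) delegation wins.
    for i in range(len(labels)):
        zone = ".".join(labels[i:])
        if zone in delegations:
            return delegations[zone]
    raise LookupError(f"no delegation covers {name}")


for query in [
    "_acme-challenge.rkevin.dev",
    "_acme-challenge.webchal.photon.rkevin.dev",
    "webchal.photon.rkevin.dev",
    "rkevin.dev",
]:
    print(f"{query:42} -> {authoritative_for(query, DELEGATIONS)}")
```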

Installing cert-manager

This should be mostly the same as the installation guide on the website, but I did things a bit differently. Since I stumbled a lot, I reinstalled cert-manager a couple of times, and that caused some issues, especially with the custom resource definitions. I used to set the helm chart's installCRDs value to have helm install the CRDs for me, but they aren't cleaned up properly on uninstall, and I eventually ended up with a mess. It doesn't help that I didn't install into the cert-manager namespace as the tutorial instructs, but rather into an https-cert namespace for all the certificate-related stuff. So I imported the CRDs manually using the cert-manager.crds.yaml from their installation docs, but only after a search and replace turning every occurrence of namespace: 'cert-manager' into namespace: 'https-cert' (there are 6 occurrences in total). Then I installed the helm chart without CRDs, and with the additional option extraArgs='{--dns01-recursive-nameservers-only}'. I'll explain this later on.
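The search and replace is simple, but matching the full namespace: 'cert-manager' string matters: a blind replace of cert-manager would also mangle things like the CRDs' own cert-manager.io API group. A minimal Python sketch that also reports the count, so you can check it against the 6 occurrences I saw; the file helper and the sample snippet are assumptions for illustration.

```python
from pathlib import Path

OLD = "namespace: 'cert-manager'"
NEW = "namespace: 'https-cert'"


def retarget_namespace(manifest: str) -> tuple[str, int]:
    """Point the manifest's namespace references at https-cert.

    Only the exact `namespace: 'cert-manager'` string is replaced, so names
    such as the cert-manager.io API group are left alone.
    Returns the new text and the number of replacements made.
    """
    count = manifest.count(OLD)
    return manifest.replace(OLD, NEW), count


def retarget_file(path: Path) -> int:
    """Rewrite a downloaded manifest (e.g. cert-manager.crds.yaml) in place."""
    text, count = retarget_namespace(path.read_text())
    path.write_text(text)
    return count


# Made-up snippet, only to show what does and doesn't get replaced.
sample = "group: cert-manager.io\n  namespace: 'cert-manager'\n"
print(retarget_namespace(sample))
```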

tl;dr:

```shell
helm repo add jetstack https://charts.jetstack.io
# ...
```

Creating the BIND server docker image

I chose BIND over something like ACMEDNS because I know a little bit about how to configure it, even if not a lot. Also, ACMEDNS has stuff like registering accounts, which a) feels kinda complicated, and b) I'm not sure where it keeps persistent account data, which makes it troublesome to run on k8s (I don't feel like giving it a proper PVC, but I also don't want the accounts to be destroyed on a reboot).

I built my BIND image on Alpine Linux, which is a nice small distro for this kind of thing. No, seriously, the arm32v7/alpine:3.12.0 image is literally 3.77MB. It boggles my mind how you can fit a complete libc and a proper package manager, along with some other libraries like libssl, on about 3 floppy disks. It's insane. (Stares at the stupid Windows Docker container I made a couple of weeks ago, which is around 20GB in comparison. That's a long story for another day.)

This is my Dockerfile:

```dockerfile
FROM arm32v7/alpine:3.12.0
# ...
```

Plain and simple. I install BIND, copy over some config files, and when you start the container it reads the shared key from an environment variable called TSIG_SECRET, then starts named.
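The entrypoint itself is a shell script using sed, but the substitution it performs is easy to sketch. Here's a Python version of the same idea, assuming the placeholder is the literal string TSIG_SECRET inside the key stanza and an hmac-sha256 key (the algorithm line is an assumption, not copied from my config). One thing worth watching with sed: base64 secrets can contain "/", which collides with sed's usual s/.../.../ delimiter unless you pick a different one.

```python
import os

PLACEHOLDER = "TSIG_SECRET"


def render_config(template: str, secret: str) -> str:
    """Swap the placeholder for the real key, like the entrypoint's sed call."""
    if not secret:
        raise ValueError("TSIG_SECRET is empty; refusing to start named without a key")
    if PLACEHOLDER not in template:
        raise ValueError("template has no TSIG_SECRET placeholder to replace")
    # A plain string replace has no delimiter, so '/' and '+' in base64 are safe.
    return template.replace(PLACEHOLDER, secret)


def render_from_env(template: str) -> str:
    """Read the key from the environment, as the container does at startup."""
    return render_config(template, os.environ.get("TSIG_SECRET", ""))


def exec_named(config_path: str) -> None:
    """Replace this process with named in the foreground (-g), using config_path (-c)."""
    os.execvp("named", ["named", "-g", "-c", config_path])


# Hypothetical key stanza; only the TSIG_SECRET placeholder idea is from the post.
template = 'key "cert-manager" {\n\talgorithm hmac-sha256;\n\tsecret "TSIG_SECRET";\n};\n'
print(render_config(template, "abc/def+ghi="))  # made-up secret
```

Using exec rather than starting named as a child keeps named as the container's main process, so it receives the signals Kubernetes sends on shutdown.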

The configuration file is based on the authoritative name server configuration file that came with the install. I changed the listen-on directive to 0.0.0.0/0 to listen on all interfaces. (It took me a while to realize that a bare 0.0.0.0 matches no interfaces at all, and it was a pain to debug, since BIND is happy to start with no listening interfaces.) The config file also comes with default directives that disable zone transfers and recursive resolving.
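The 0.0.0.0 gotcha makes sense once you treat listen-on as an address match list rather than a "bind address": a bare address matches only that exact address, and no interface actually has 0.0.0.0, whereas the prefix 0.0.0.0/0 matches every IPv4 address (BIND's any keyword does the same). A rough model using Python's ipaddress module, with made-up interface addresses:

```python
from ipaddress import ip_address, ip_network


def entry_to_network(entry: str):
    """Treat a bare address as a single host (/32), like a match-list element."""
    return ip_network(entry if "/" in entry else f"{entry}/32", strict=False)


# Hypothetical addresses a pod might have: loopback plus a k3s pod IP.
interfaces = ["127.0.0.1", "10.42.0.17"]

for entry in ["0.0.0.0", "0.0.0.0/0"]:
    net = entry_to_network(entry)
    listening = [addr for addr in interfaces if ip_address(addr) in net]
    print(f"listen-on {{ {entry}; }} -> {listening}")
```

The bare 0.0.0.0 yields an empty list, which is exactly the "starts fine, answers nothing" behavior I ran into.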

Here are some directives I added to the bottom:

```
key "cert-manager" {
// ...
```

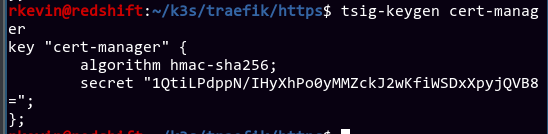

This adds a key called cert-manager that cert-manager will use to configure BIND over RFC2136, a protocol for telling a DNS server to add or delete DNS entries. The key itself is currently a placeholder (TSIG_SECRET) that gets replaced with the actual value by sed in the entrypoint. It also adds the zone rkevin.dev and disables the control channel on port 953.

I also added a dummy.db with some default entries, mostly just an SOA entry that placates BIND so it actually starts:

```
$ORIGIN rkevin.dev.
; ...
```

There's also another stupid entry there, but that's for me to know and for you to find out. If you do, let me know!

In any case, I built the docker image using docker build -t minimal-bind ., and once I'd tested it locally I used docker tag minimal-bind:latest registry.rkevin.dev/minimal-bind:1.1 and docker push registry.rkevin.dev/minimal-bind:1.1 to add it to my own registry. The version is 1.1 because I fixed some tiny bugs from 1.0.

Side note: I used some QEMU magic to run armv7l binaries on my x86-64 laptop, which lets me build Docker images for the Pis on my own machine. I forget the exact way I did it (it was a loooong time ago), but Google should give you some good results.

Deploying BIND on the cluster and exposing it to the public

I created the shared secret between cert-manager and BIND using tsig-keygen. It should give you something like this (don't worry, this isn't the secret I'm currently using, it's just an example):

The base64 in quotes is what we want. I then created a kubernetes secret for the key:

```yaml
apiVersion: v1
# ...
```
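For reference, here's a Python sketch of the same pieces: random key material like tsig-keygen produces, the matching BIND key stanza, and a Secret manifest holding it. The 32-byte size, the hmac-sha256 algorithm, the https-cert namespace and the tsig-secret data key are all assumptions for illustration. Note that values under a Secret's data field must be base64-encoded, so an already-base64 TSIG key gets encoded a second time (the stringData field accepts plain strings if you'd rather avoid that).

```python
import base64
import json
import secrets


def make_tsig_secret(num_bytes: int = 32) -> str:
    """Random key material, base64-encoded (256 bits suits hmac-sha256)."""
    return base64.b64encode(secrets.token_bytes(num_bytes)).decode("ascii")


def bind_key_stanza(name: str, secret: str) -> str:
    """named.conf key block in the same shape tsig-keygen prints."""
    return f'key "{name}" {{\n\talgorithm hmac-sha256;\n\tsecret "{secret}";\n}};\n'


def k8s_secret(name: str, namespace: str, data_key: str, value: str) -> dict:
    """Opaque Secret manifest; `data` values must be base64 of the raw value."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        "data": {data_key: base64.b64encode(value.encode("ascii")).decode("ascii")},
    }


tsig = make_tsig_secret()
print(bind_key_stanza("cert-manager", tsig))
# "https-cert" and "tsig-secret" are assumptions; kubectl apply accepts JSON too.
print(json.dumps(k8s_secret("acme-named-key", "https-cert", "tsig-secret", tsig), indent=2))
```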

It's finally time for us to deploy the application:

```yaml
apiVersion: apps/v1
# ...
```

The TSIG_SECRET environment variable will be populated from the acme-named-key we just created. Our docker entrypoint will use sed to add this to our config file, then start named. Nice!

Finally, we need a k8s service to expose this deployment to the public:

```yaml
apiVersion: v1
# ...
```

I chose port 5791 because why not. Using k3s, the LoadBalancer type behaves kinda like NodePort, so I can just expose port 5791 on one of my nodes to the public. My router is using OpenWRT, so I just added these blocks to the configs:

```
config redirect
# ...
```

Why I have 3 blocks is sort of a long story, but the tl;dr is that I've separated my LAN into two VLANs: lan and lan_servers. My housemate's and my devices that connect over WiFi are in the first VLAN, while all my Raspberry Pis and other server stuff are in the second. lan_servers may not initiate connections to the lan VLAN, just for basic protection. Hence, I needed 3 blocks to route traffic from the WAN and from both VLANs to the right destination.

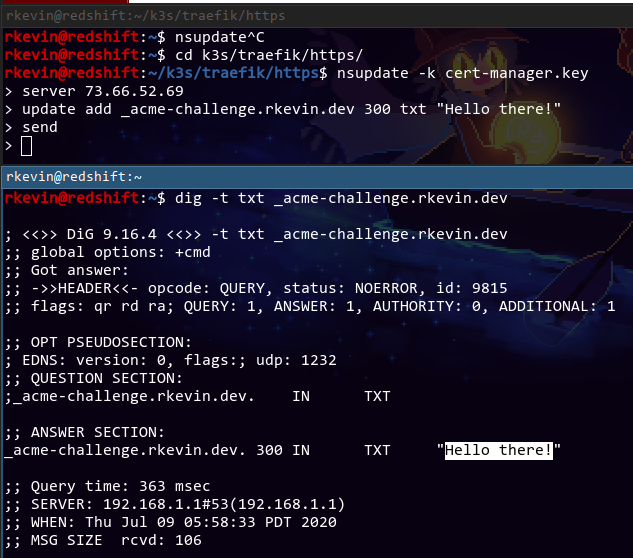

AAAAAAnyway, after all that, the BIND DNS server is complete! We can add an entry using nsupdate manually to verify it works, and it sort of does*.

*: DNS takes a ridiculously long time to propagate. More on that later. This test was done at least 15 hours after the NS entries were configured.

Nice. We can then remove the entry using the nsupdate commands update remove _acme-challenge.rkevin.dev txt followed by send.
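dig is the right tool for poking at this, but when resolvers disagree (as in the propagation note at the end) it can help to know what a query looks like on the wire. Here's a standard-library Python sketch that builds a TXT query per RFC 1035 and reads the response code and answer count from the reply header; the query ID and timeout are arbitrary choices, and full answer parsing is left to dig.

```python
import socket
import struct

TYPE_TXT = 16
CLASS_IN = 1


def encode_name(name: str) -> bytes:
    """Domain name as length-prefixed labels ending in a zero byte (RFC 1035)."""
    out = b""
    for label in name.rstrip(".").split("."):
        raw = label.encode("ascii")
        if not 0 < len(raw) < 64:
            raise ValueError(f"invalid label {label!r}")
        out += bytes([len(raw)]) + raw
    return out + b"\x00"


def build_query(name: str, qtype: int = TYPE_TXT, query_id: int = 0x1234) -> bytes:
    """One-question query: header (RD flag set), then QNAME, QTYPE, QCLASS."""
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    return header + encode_name(name) + struct.pack("!HH", qtype, CLASS_IN)


def rcode_and_answers(message: bytes) -> tuple[int, int]:
    """Response code (0 = NOERROR, 3 = NXDOMAIN) and answer count from a header."""
    _id, flags, _qd, ancount, _ns, _ar = struct.unpack("!HHHHHH", message[:12])
    return flags & 0x000F, ancount


def ask(server: str, name: str, port: int = 53, timeout: float = 3.0) -> bytes:
    """Send the TXT query over UDP and return the raw reply bytes."""
    query = build_query(name)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(query, (server, port))
        reply, _addr = sock.recvfrom(4096)
    if reply[:2] != query[:2]:
        raise ValueError("reply ID does not match query ID")
    return reply
```

For example, comparing rcode_and_answers(ask("1.1.1.1", "_acme-challenge.rkevin.dev")) with the same call against 8.8.8.8 shows at a glance whether two resolvers agree.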

Configuring the ClusterIssuer

For cert-manager to create certificates automatically, we need to tell it how. This is usually done with an Issuer or a ClusterIssuer. The only difference is that a ClusterIssuer is cluster-wide, while an Issuer is tied to a namespace. It doesn't matter for me, so I opted for a ClusterIssuer because why not. I configured the issuer to handle HTTP-01 challenges using the default ingress (Traefik, whose port 80 is already exposed) and DNS-01 challenges using RFC2136, which we've just set up.
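Why both solver types? ACME CAs like Let's Encrypt only offer DNS-01 for wildcard names, while ordinary names can also be validated over HTTP-01 through the ingress. This is a deliberately simplified Python model of that split, not cert-manager's real solver selection (which matches solvers using per-solver selectors); plan_challenges is a hypothetical helper.

```python
def plan_challenges(dns_names: list[str]) -> dict[str, str]:
    """Map each certificate name to the challenge path it would take (simplified)."""
    plan = {}
    for name in dns_names:
        if name.startswith("*."):
            # Wildcards can only be proven via DNS-01, i.e. RFC2136 -> BIND.
            plan[name] = "dns-01 via rfc2136 (BIND)"
        else:
            # Plain names can use HTTP-01 through the ingress on port 80.
            plan[name] = "http-01 via the default ingress"
    return plan


for name, path in plan_challenges(["rkevin.dev", "*.rkevin.dev"]).items():
    print(f"{name:14} -> {path}")
```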

```yaml
apiVersion: cert-manager.io/v1alpha3
# ...
```

Creating the certificate

```yaml
apiVersion: cert-manager.io/v1alpha2
# ...
```

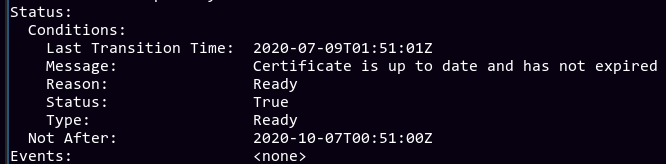

This creates the Certificate object. cert-manager should automatically detect it and try to issue the certificate. It helps with debugging to also run kubectl logs -f on the cert-manager pod. After some trial and error with the configs, we can see it successfully issued the certificate!

If we check the corresponding secret, primary-cert-secret, we can see the full certificate. This can then be used for Traefik, although I haven't figured out how to configure it yet.
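The secret is a standard TLS secret: the PEM certificate chain lives under tls.crt and the private key under tls.key, both base64-encoded. A small Python sketch for inspecting it from the output of kubectl get secret primary-cert-secret -o json; the sample JSON below is fake, built only to exercise the helpers.

```python
import base64
import json


def field_from_secret(secret_json: str, key: str = "tls.crt") -> str:
    """Decode one data field from `kubectl get secret ... -o json` output."""
    secret = json.loads(secret_json)
    return base64.b64decode(secret["data"][key]).decode("ascii")


def count_certificates(pem: str) -> int:
    """tls.crt normally holds the leaf certificate followed by intermediates."""
    return pem.count("-----BEGIN CERTIFICATE-----")


# Fake secret with a placeholder PEM body, just to exercise the helpers.
fake_pem = "-----BEGIN CERTIFICATE-----\nAAAA\n-----END CERTIFICATE-----\n"
fake_secret = json.dumps(
    {"data": {"tls.crt": base64.b64encode(fake_pem.encode("ascii")).decode("ascii")}}
)
print(count_certificates(field_from_secret(fake_secret)))
```

Feeding the decoded real tls.crt to openssl x509 -noout -text then shows the subject alternative names, which should include *.rkevin.dev.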

Note about DNS propagation delay

While this article makes it look straightforward, the debugging process was pretty painful. The main culprit was the DNS propagation delay of the NS records. When I had things set up pretty much as described here, the DNS TXT queries wouldn't resolve through certain resolvers like Cloudflare. It doesn't help that I technically have something like 5 DNS servers lying around (tl;dr: my computer's systemd-resolved cache and the k8s CoreDNS both use my router's dnsmasq as upstream, which uses a Pi-hole as upstream, which uses an Unbound server as upstream, which uses Cloudflare DNS), and resolution would work on some of them but not others. Also, when using dig on several public recursive resolvers, I got different results. At one point, 75.75.75.75 (Comcast's resolver) gave me the TXT record, but neither Cloudflare (1.1.1.1) nor Google (8.8.8.8) did. Asking Cloudflare to purge the DNS cache for my domain did nothing. Later on, Google's DNS magically got fixed, and I assumed it was due to a configuration or firewall fix on my end. I actually thought my NS record setup was triggering some sort of bug, and that whether it resolved correctly was implementation-dependent. After sleeping and ignoring the setup for a dozen hours or so, the issue just magically fixed itself, so I think it was just DNS propagation after all.
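I never pinned down which cached record was responsible, but TTL-based caching is one plausible explanation for resolvers disagreeing: a resolver that looked up the name before the delegation was visible can keep serving that old (possibly negative) answer until its TTL expires, and each resolver in a chain like mine keeps its own copy. A toy Python model, with made-up TTLs and an explicit clock:

```python
from collections.abc import Callable
from dataclasses import dataclass, field

Upstream = Callable[[str], tuple[str, float]]  # name -> (answer, ttl in seconds)


@dataclass
class CachingResolver:
    """Toy resolver cache: an answer is reused until its TTL runs out."""

    entries: dict[str, tuple[str, float]] = field(default_factory=dict)

    def lookup(self, name: str, now: float, upstream: Upstream) -> str:
        cached = self.entries.get(name)
        if cached is not None and now < cached[1]:
            return cached[0]  # still fresh, so upstream is never consulted
        answer, ttl = upstream(name)
        self.entries[name] = (answer, now + ttl)  # store answer with its expiry time
        return answer


delegation_visible = False


def upstream(name: str) -> tuple[str, float]:
    # Made-up TTLs: a long-lived negative answer before the delegation is seen.
    if delegation_visible:
        return "TXT record from BIND", 60.0
    return "NXDOMAIN", 3600.0


resolver = CachingResolver()
name = "_acme-challenge.rkevin.dev"
print(resolver.lookup(name, now=0, upstream=upstream))     # asks upstream, caches NXDOMAIN
delegation_visible = True                                   # delegation now visible upstream
print(resolver.lookup(name, now=600, upstream=upstream))   # still served from cache
print(resolver.lookup(name, now=3601, upstream=upstream))  # TTL expired, fresh answer
```

This prints NXDOMAIN twice before the real answer shows up; two resolvers that first asked at different times would flip over at different times, which matches the inconsistent results above.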

That's partially why I added the --dns01-recursive-nameservers-only flag to the extraArgs when installing the cert-manager helm chart: it makes cert-manager's DNS-01 self-check query only the recursive resolvers it's configured with, instead of also asking the authoritative nameservers directly, which I hoped would help narrow down the issues. I don't think I need it now, but it's already installed this way, so I'm fine leaving it as-is.

That's all I have for now. This site is still served by my Apache webserver, but I should be able to migrate my static sites and a bunch of other stuff to Traefik soon. See you then!